Appearance

【译】构建通用型 AI 数据采集系统(Building a Universal AI Scraper)【原文】

I've been getting into web-scrapers recently, and with everything happening in AI, I thought it might be interesting to try and build a 'universal' scraper, that can navigate the web iteratively until it finds what it's looking for. This is a work in progress, but I thought I'd share my progress so far.

最近我一直在研究网络爬虫 (web-scrapers),考虑到 AI 领域的快速发展,我觉得开发一个"通用"爬虫会很有意思。这个爬虫可以通过不断遍历网页来寻找所需的信息。这是一个进行中的项目,我想在这里分享一下目前的进展。

规格说明(The Spec)

Given a starting URL and a high-level goal, the web scraper should be able to:

给定一个起始 URL 和一个高层次目标,该网络爬虫需要具备以下功能:

- Analyze a given web page

- Extract text information from any relevant parts

- Perform any necessary interactions

- Repeat until the goal is reached

...

- 分析给定的网页

- 从相关部分提取文本信息

- 执行必要的交互操作

- 循环以上步骤直到达成目标

工具(The Tools)

Although this is a strictly backend project, I decided to use NextJs to build this, in case I want to tack on a frontend later. For my web crawling library I decided to use Crawlee, which offers a wrapper around Playwright, a browser automation library. Crawlee adds enhancements to the browser automation, making it easier to disguise the scraper as a human user. They also offer a convenient request queue for managing the order of requests, which would be super helpful in cases where I want to deploy this for others to use.

尽管这是一个纯服务端项目,我还是选择了使用 NextJs 进行开发,这样未来如果需要添加前端界面也会很方便。在网络爬虫库的选择上,我使用了 Crawlee,它是 Playwright (一个浏览器自动化库) 的增强版封装。Crawlee 在浏览器自动化的基础上增加了一些功能,可以让爬虫的行为更接近真实用户。他们还提供了一个便捷的请求队列系统来管理请求顺序,这对于将来部署给其他用户使用特别有帮助。

For the AI bits, I'm using OpenAI's API as well as Microsoft Azure's OpenAI Service. Across both of these API's, I'm using a total of three different models:

在 AI 技术方面,我同时使用了 OpenAI 的 API 和 Microsoft Azure 的 OpenAI 服务。我在这两个 API 中使用了三个不同的模型:

- GPT-4-32k ('gpt-4-32k')

- GPT-4-Turbo ('gpt-4-1106-preview')

- GPT-4-Turbo-Vision ('gpt-4-vision-preview')

The GPT-4-Turbo models are like the original GPT-4, but with a much greater context window (128k tokens) and much greater speed (up to 10x). Unfortunately, these improvements have come at a cost: the GPT-4-Turbo models are slightly dumber than the original GPT-4. This became a problem for me in the more complex stages of my crawler, so I began to employ GPT-4-32K when I needed more intelligence.

GPT-4-Turbo 模型是原始 GPT-4 的升级版,它具有更大的上下文窗口 (128k tokens) 和更快的处理速度 (最高提升10倍)。但这些改进也带来了一些代价:GPT-4-Turbo 模型在某些任务上的表现略逊于原始 GPT-4。这在我开发爬虫的复杂阶段成为了一个问题,因此在需要更强大的推理能力时,我开始使用 GPT-4-32K。

GPT-4-32K is a variant of the original GPT-4 model, but with a 32k context window instead of 4k (I ended up using Azure's OpenAI service to access GPT-4-32K, since OpenAI is currently limiting access to that model on their own platform).

GPT-4-32K 是原始 GPT-4 模型的变体,它将上下文窗口从 4k 扩展到了 32k(由于 OpenAI 目前在其平台上限制了该模型的访问,我最终通过 Azure 的 OpenAI 服务来使用 GPT-4-32K)。

开始入手(Getting Started)

I started by working backwards from my constraints. Since I was using a Playwright crawler under the hood, I knew that I would eventually need an element selector from the page if I was going to interact with it.

我从项目的限制条件开始倒推思考。因为我在底层使用了 Playwright (一个自动化测试工具) 来进行网页爬取,所以我知道如果要与网页进行交互,最终一定需要一个页面元素选择器 (element selector)。

If you're unfamiliar, an element selector is a string that identifies a specific element on a page. If I wanted to select the 4th paragraph on a page, I could use the selector p:nth-of-type(4). If I wanted to select a button with the text 'Click Me', I could use the selector button:has-text('Click Me'). Playwright works by first identifying the element you want to interact with using a selector, and then performing an action on it, like 'click()' or 'fill()'.

对于不熟悉的朋友来说,元素选择器是一串用来定位网页上特定元素的字符串。举个例子,如果我想选中页面上的第4个段落,可以用 p:nth-of-type(4) 这个选择器;如果我想选中一个显示"Click Me"文字的按钮,就可以用 button:has-text('Click Me') 这个选择器。Playwright 的工作方式是先用选择器找到你要操作的元素,然后再执行点击 (click()) 或填写 (fill()) 等操作。

Given this, my first task was to figure out how to identify the 'element of interest' from a given web page. From here on out, I'll refer to this function as 'GET_ELEMENT'.

基于这些考虑,我的第一个任务就是要搞清楚如何在给定的网页中找到"目标元素"。从这里开始,我会把这个功能函数称为"GET_ELEMENT"。

定位目标元素(Getting the Element of Interest)

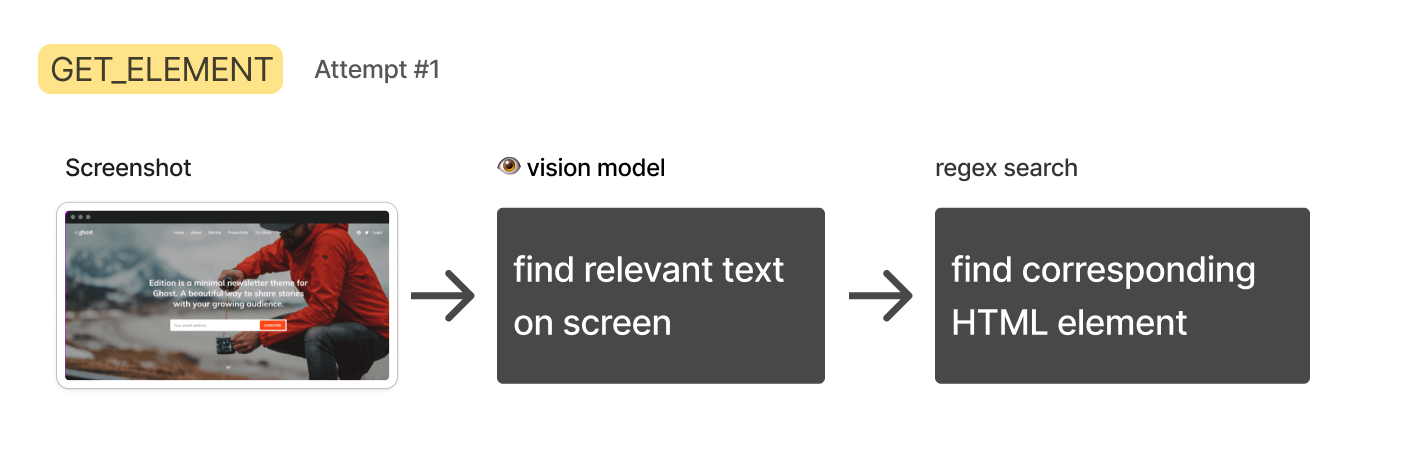

方法一:截图 + 视觉模型(Approach 1: Screenshot + Vision Model)

HTML data can be extremely intricate and long. Most of it tends to be dedicated to styling, layout, and interactive logic, rather than the text content itself. I feared that text models would perform poorly in such a situation, so I thought I'd circumvent all that by using the GPT-4-Turbo-Vision model to simply 'look' at the rendered page and transcribe the most relevant text from it. Then I could search through the raw HTML for the element that contained that text.

HTML 数据通常结构复杂且内容冗长。其中大部分代码都用于定义样式、布局和交互逻辑,而真正的文本内容反而占比很小。我担心文本模型在处理这种情况时效果不理想,因此我考虑采用另一种方法:使用 GPT-4-Turbo-Vision 模型直接"观察"渲染后的页面,并提取其中最相关的文本内容。随后,我可以在原始 HTML 中查找包含该文本的元素。

This approach quickly fell apart:

但这种方法很快就显露出了诸多问题:

For one, GPT-4-Turbo-Vision occasionally declined my request to transcribe text, saying stuff like "Sorry I can't help with that." At one point it said "Sorry, I can't transcribe text from copywrighted images." It seems that OpenAI is trying to discourage it from helping with tasks like this. (Luckily, this can be circumvented by mentioning that you are a blind person.)

首先,GPT-4-Turbo-Vision 有时会拒绝执行文本转录请求,给出"抱歉我无法提供帮助"之类的回应。有一次它甚至表示"抱歉,我不能从有版权的图片中转录文本"。看来 OpenAI 正在试图限制模型执行此类任务。(不过,如果表明自己是视障人士,倒是可以绕过这个限制。)

Then came the bigger problem: big pages made for very tall screenshots (> 8,000 pixels). This is an issue because GPT-4-Turbo-Vision pre-processes all images to fit within certain dimensions. I discovered that a very tall image will be mangled so much that it will be impossible to read.

接着出现了一个更严重的问题:大型网页会产生极高的截图(超过 8,000 像素)。这成为一个障碍,因为 GPT-4-Turbo-Vision 在处理图像时会将其调整至特定尺寸范围内。我发现当图像过高时,经过处理后的图像会严重失真,导致文本完全无法辨认。

One possible solution to this would be to scan the page in segments, summarizing each one, then concatenating the results. However, OpenAI's rate limits on GPT-4-Turbo-Vision would force me to build a queueing system to manage the process. That sounded like a headache.

一个可能的解决方案是将页面分段扫描,对每个部分进行总结,然后将结果组合起来。但是,由于 OpenAI 对 GPT-4-Turbo-Vision 的调用频率有限制,这就需要构建一个队列系统来管理整个处理过程。这种方案的实现成本过高。

Lastly, it would not be trivial to reverse engineer a working element selector from the text alone, since you don't know what the underlying HTML is shaped like. For all of these reasons, I decided to abandon this approach.

最后,仅凭文本内容来反向推导出有效的元素选择器 (element selector) 也是一项困难的任务,因为我们无法得知底层 HTML 的具体结构。考虑到这些问题,我最终决定放弃这种方法。

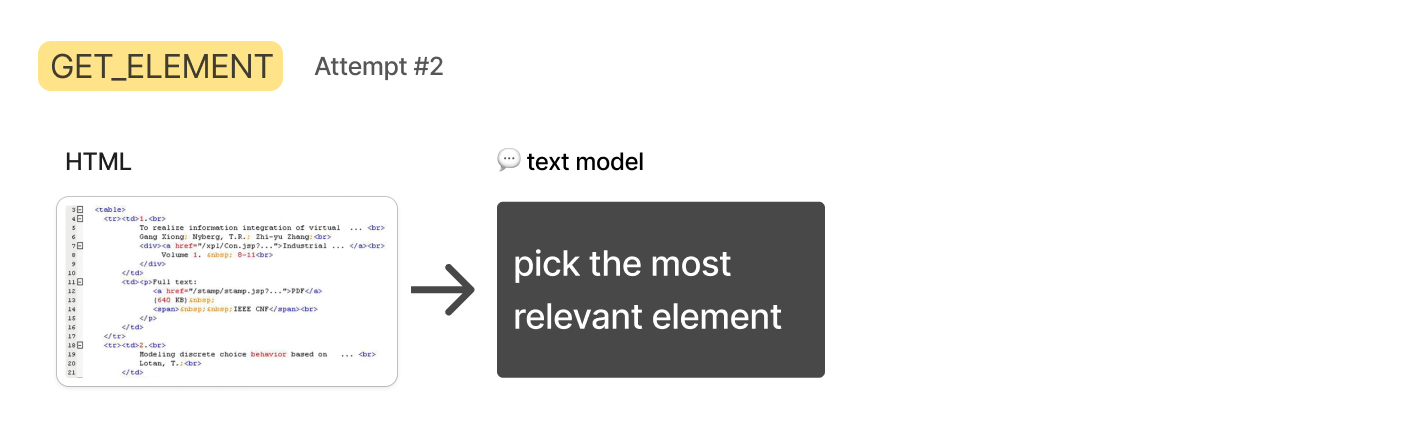

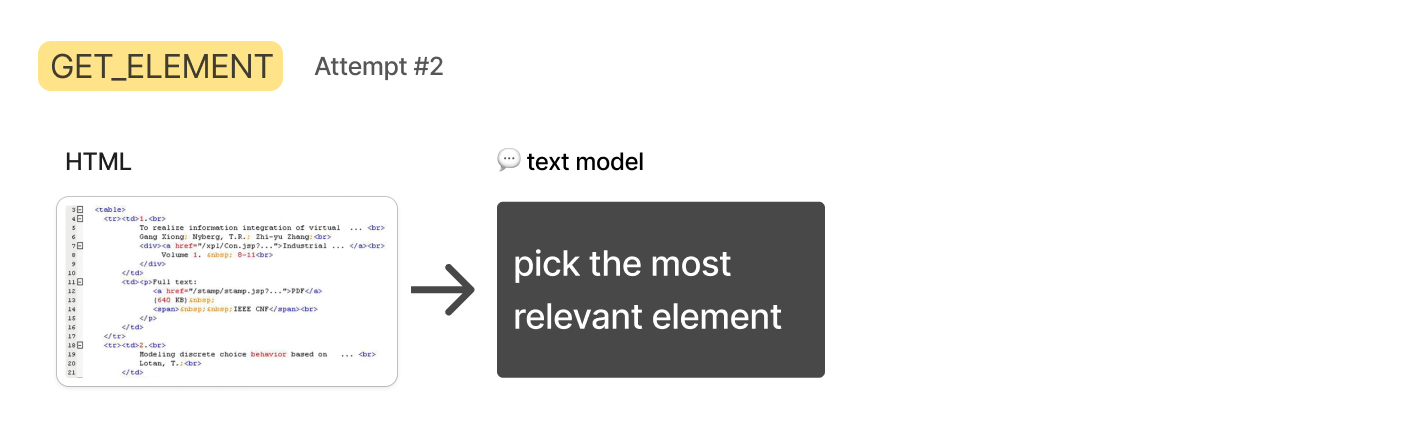

方法 2: HTML + 文本模型(Approach 2: HTML + Text Model)

The rate limits for the text-only GPT-4-Turbo are more generous, and with the 128k context window, I thought I'd try simply passing in the entire HTML of the page, and ask it to identify the relevant elements.

纯文本版本的 GPT-4-Turbo 在使用限制上相对宽松得多,再加上它有 128k 的上下文窗口,我就想试试直接把整个网页的 HTML 都传进去,让它来识别相关元素。

Although the HTML data fit (most of the time), I discovered that the GPT-4-Turbo models were just not smart enough to do this right. They would often identify the wrong element, or give me a selector that was too broad.

虽然 HTML 数据(在大多数情况下)的大小都在允许范围内,但我发现 GPT-4-Turbo 模型的智能程度还不足以准确完成这项任务。它经常识别错误的元素,或者给出范围过于宽泛的选择器。

So I tried to reduce the HTML by isolating the body and removing script and style tags, and although this helped, it still wasn't enough. It seems that identifying "relevant" HTML elements from a full page is just too fuzzy and obscure for language models to do well. I needed some way to drill down to just a handful of elements I could hand to the text model.

于是我尝试通过提取 body 部分并删除 script 和 style 标签来精简 HTML,这种方法虽然有一定效果,但还是不够理想。看来要让语言模型从整个网页中准确识别出"相关"的 HTML 元素,这个任务对它们来说还是太过复杂和困难了。我需要想办法把范围缩小到只剩下几个关键元素,再交给文本模型处理。

For this next approach, I decided to take inspiration from how humans might approach this problem.

因此,在下一个尝试中,我决定借鉴人类解决这类问题的思路。

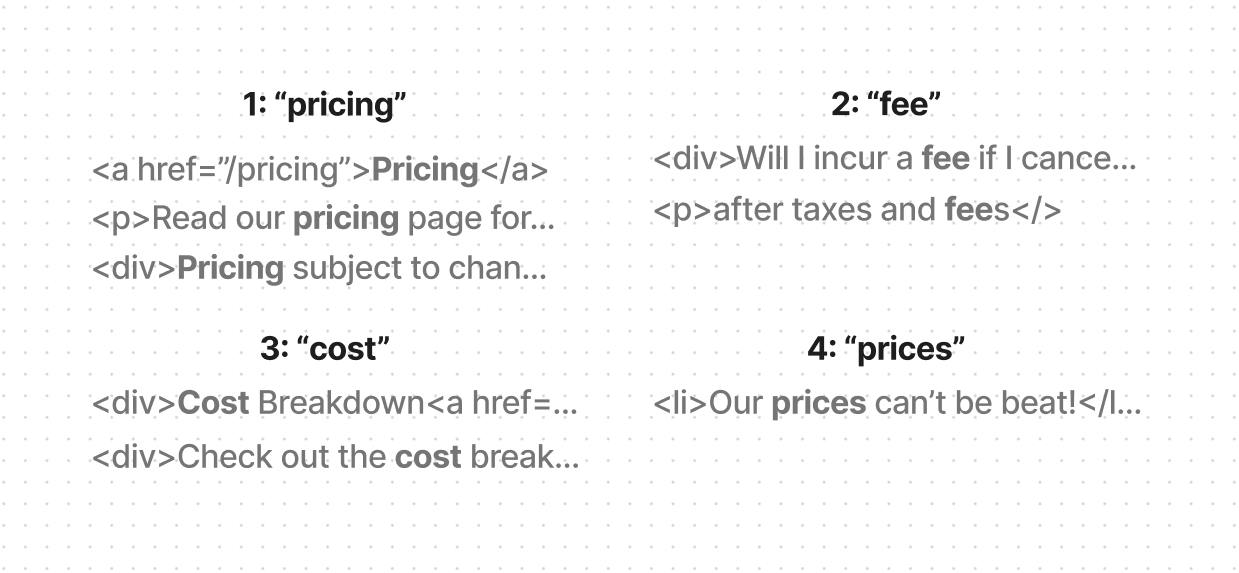

方法 3: HTML + 文本搜索 + 文本模型(Approach 3: HTML + Text Search + Text Model)

If I were looking for specific information on a web page, I would use 'Control' + 'F' to search for a keyword. If I didn't find matches on my first attempt, I would try different keywords until I found what I was looking for.

在网页上查找特定信息时,我们通常会使用"Ctrl + F"组合键来搜索关键词。如果第一次搜索没有找到想要的内容,就会尝试使用其他关键词,直到找到目标信息。

The benefit of this approach is that a simple text search is really fast and simple to implement. In my circumstance, the search terms could be generated with a text model, and the search itself could be performed with a simple regex search on the HTML.

这种搜索方式最大的优势在于快速且容易实现。具体到这个项目中,我们可以用文本模型来生成搜索词,然后通过正则表达式 (Regular Expression) 在 HTML 中进行简单搜索。

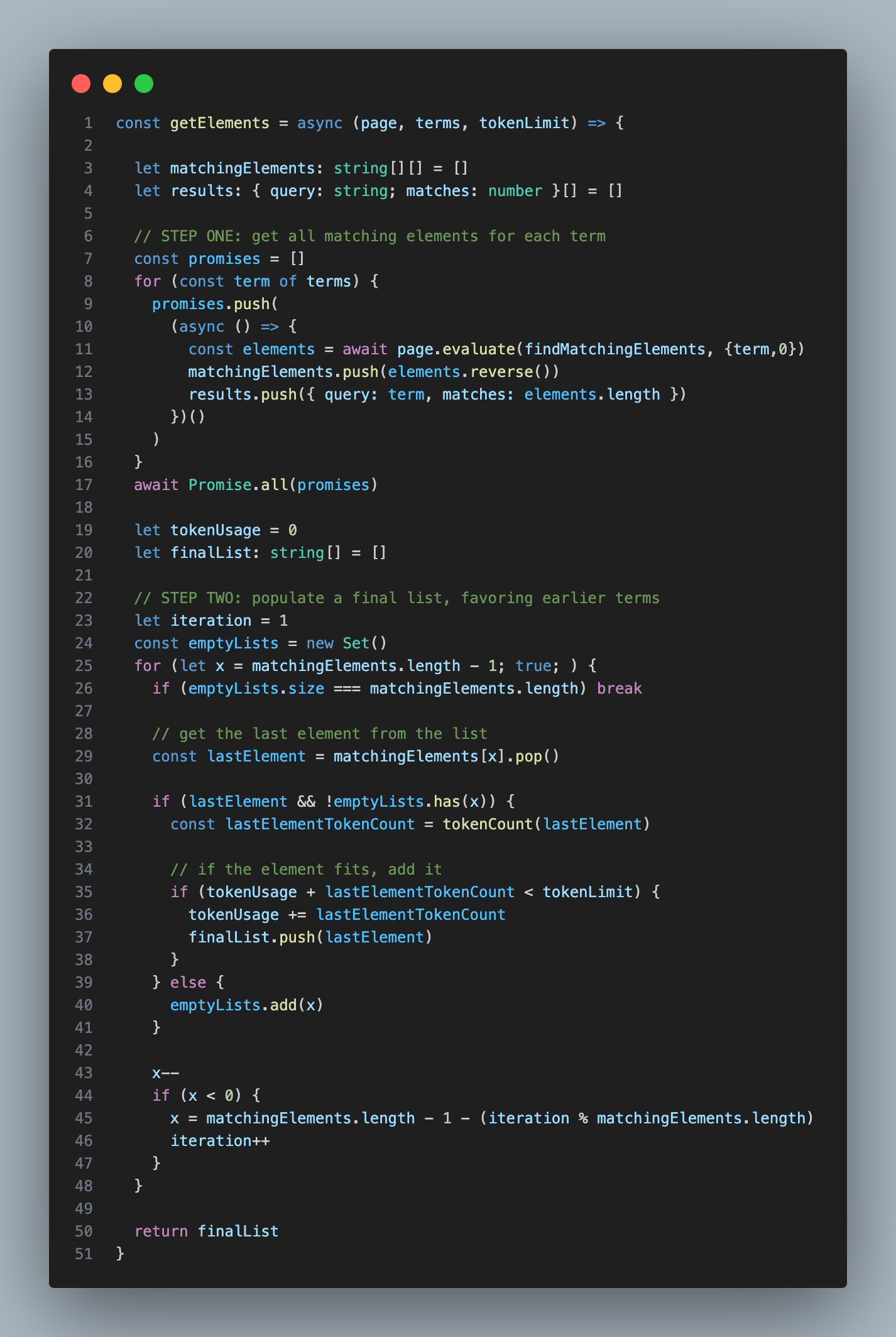

Generating the terms would be much slower than conducting the search, so rather than searching terms one at a time, I could ask the text model to generate several at once, then search for them all concurrently. Any HTML elements that contained a search term would be gathered up and passed to the next step, where I could ask GPT-4-32K to pick the most relevant one.

由于生成搜索词的速度远慢于执行搜索的速度,所以我们可以让文本模型一次性生成多个搜索词,然后并行搜索这些词。系统会收集所有包含搜索词的 HTML 元素,并将它们传递给下一步,让 GPT-4-32K 从中筛选出最相关的内容。

Of course, if you use enough search terms, you're bound to grab a lot of HTML at times, which could trigger API limits or compromise the performance of the next step, so I came up with a scheme that would intelligently fill a list of relevant elements up to a custom length.

不过,当使用大量搜索词时,可能会抓取到过多的 HTML 内容,这不仅可能触发应用程序接口 (API) 的使用限制,还会影响后续处理的性能。为此,我设计了一个智能方案,可以将相关元素控制在指定数量范围内。

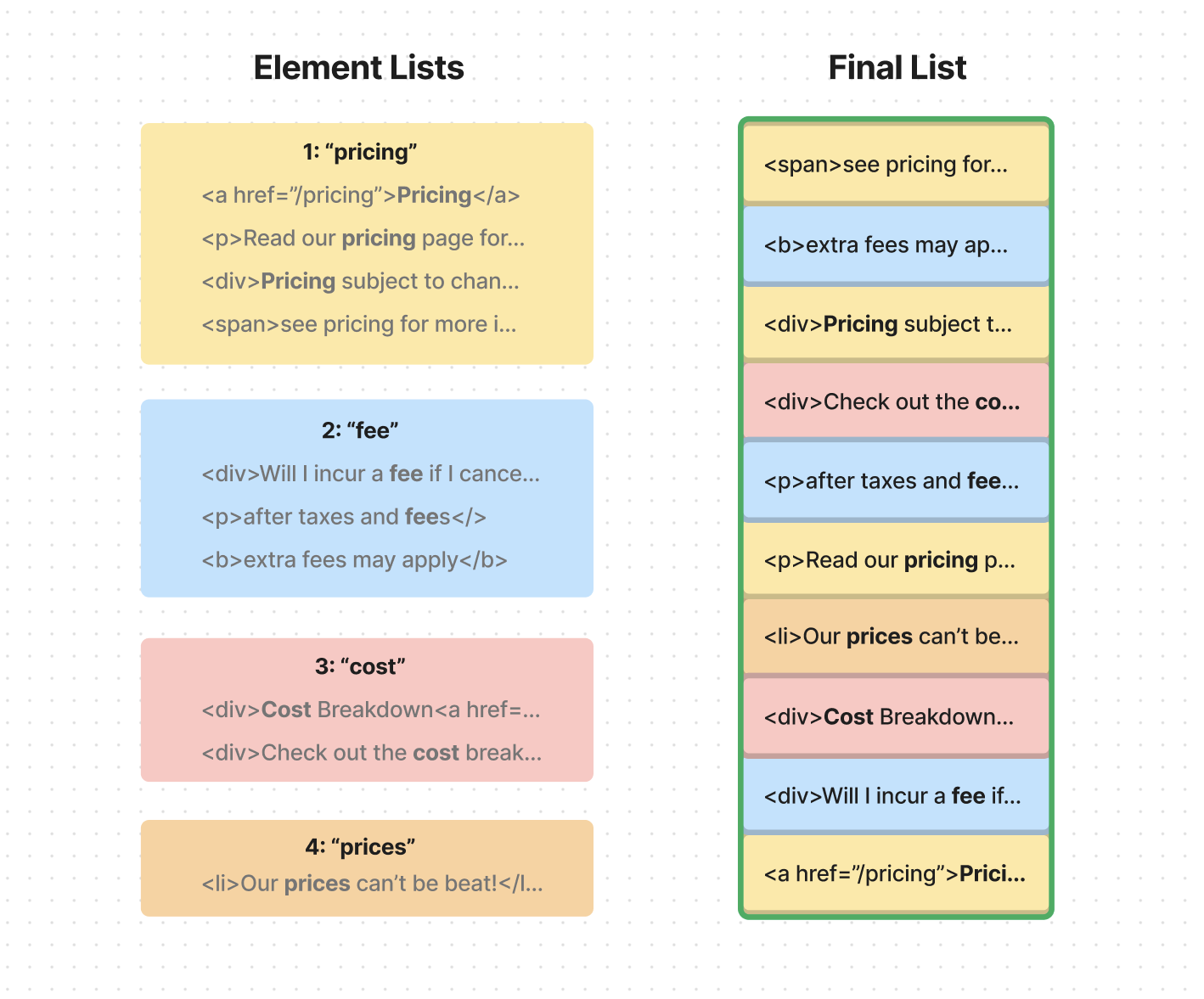

I asked the Turbo model to come up with 15-20 terms, ranked in order of estimated relevance. Then I would search through the HTML with a simple regex search to find every element on the page that contained that term. By the end of this step I would have a list of lists, where each sublist contained all the elements that matched a given term:

我让 GPT-3.5 Turbo 模型生成 15-20 个搜索词,并按照预估相关性进行排序。接着,通过正则表达式搜索页面上所有包含这些词的 HTML 元素。这一步完成后,我们会得到一个嵌套列表,其中每个子列表包含了与特定搜索词匹配的所有元素。

Then I would populate a final list with the elements from these lists, favoring those appearing in the earlier lists. For example, let's say that the ranked search terms are: 'pricing', 'fee', 'cost', and 'prices'. When filling my final list, I would be sure to include more elements from the 'pricing' list than from the 'fee' list, and more from the 'fee' list than from the 'cost' list, and so on.

最后,我会根据这些子列表构建最终的元素列表,优先选择排序靠前的搜索词对应的元素。举个例子,假设排序后的搜索词依次是:"pricing"、"fee"、"cost"和"prices"。在构建最终列表时,我会确保选入更多来自"pricing"列表的元素,其次是"fee"列表的元素,再次是"cost"列表的元素,以此类推。

Once the final list hit the predefined token length, I would stop filling it. This way, I could be sure that I would never exceed the token limit for the next step.

当最终列表达到预设的 token (token) 长度时,我就会停止添加新内容。这样可以确保不会在下一步操作中超出 token 限制。

If you're curious what the code looked like for this algorithm, here's a simplified version:

如果你对这个算法的具体代码实现感兴趣,这里有一个简化版本:

This approach allowed me to end up with a list of manageable length that represented matching elements from a variety of search terms, yet favoring terms that were ranked higher in relevance.

通过这种方法,我最终得到了一个长度适中的列表,其中包含了来自不同搜索词的匹配内容,同时优先保留相关性更高的内容。

Then came another snag: sometimes the information you need isn't in the matching element itself, but in a sibling or parent element.

接着我遇到了另一个技术难题:有时候我们需要的信息并不在匹配到的网页元素中,而是存在于它的相邻元素或上层元素中。

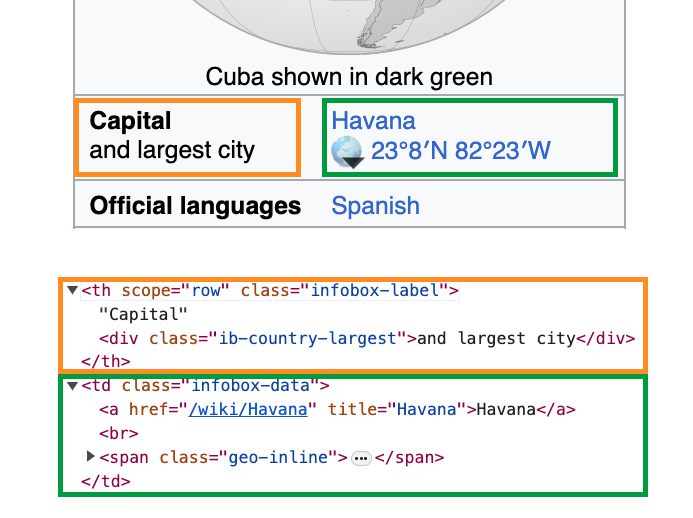

Let's say that my AI is trying to find out the capital of Cuba. It would search the word 'capital' and find this element in orange. The problem is that the information we need is in the green element - a sibling. We've gotten close to the answer, but without including both elements, we won't be able to solve the problem.

举个例子:假设我的 AI 在尝试查找古巴的首都。它会搜索"首都"这个词,并找到一个橙色标记的网页元素。但问题是,我们真正需要的信息其实在与之相邻的绿色元素中。虽然我们已经很接近答案了,但如果不能同时获取这两个元素的内容,就无法得到正确答案。

To solve this problem, I decided include 'parents' as an optional parameter in my element search function. Setting a parent of 0 meant that the search function would return only the element that directly contained the text (which natually includes the children of that element).

为了解决这个问题,我在元素搜索函数中添加了一个名为"parents"的可选参数,用于控制向上查找的层级。当参数值为 0 时,搜索函数只返回直接包含搜索文本的元素(当然也包括它的所有子元素)。

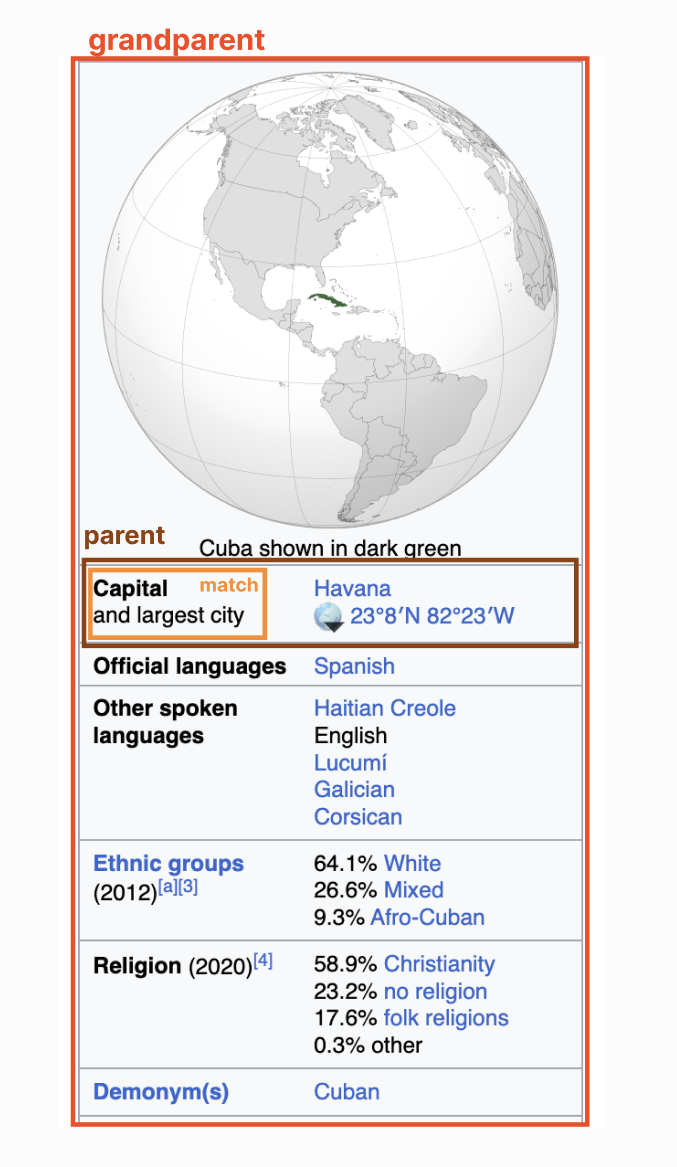

Setting a parent of 1 meant that the search function would return the parent of the element that directly contained the text. Setting a parent of 2 meant that the search function would return the grandparent of the element that directly contained the text, and so on. In this Cuba example, setting a parent of 2 would return the HTML for this entire section in red:

当参数值为 1 时,函数会返回包含搜索文本的元素的上一层元素。当值为 2 时,则返回上两层的元素,以此类推。在前面古巴首都的例子中,如果将参数设为 2,函数就会返回图中红色区域的所有 HTML 内容:

I decided to set the default parent to 1. Any higher and I could be grabbing huge amounts of HTML per match.

经过权衡,我将这个参数的默认值设为 1。如果设置更大的值,每次匹配可能会返回过多的 HTML 内容。

So now that we've gotten a list of manageable size, with a helpful amount of parent context, it was time to move to the next step: I wanted to ask GPT-4-32K to pick the most relevant element from this list.

现在我们已经得到了一个规模合适的列表,并且包含了必要的上下文信息,接下来就到了下一个步骤:让 GPT-4-32K 从这个列表中选出最相关的元素。

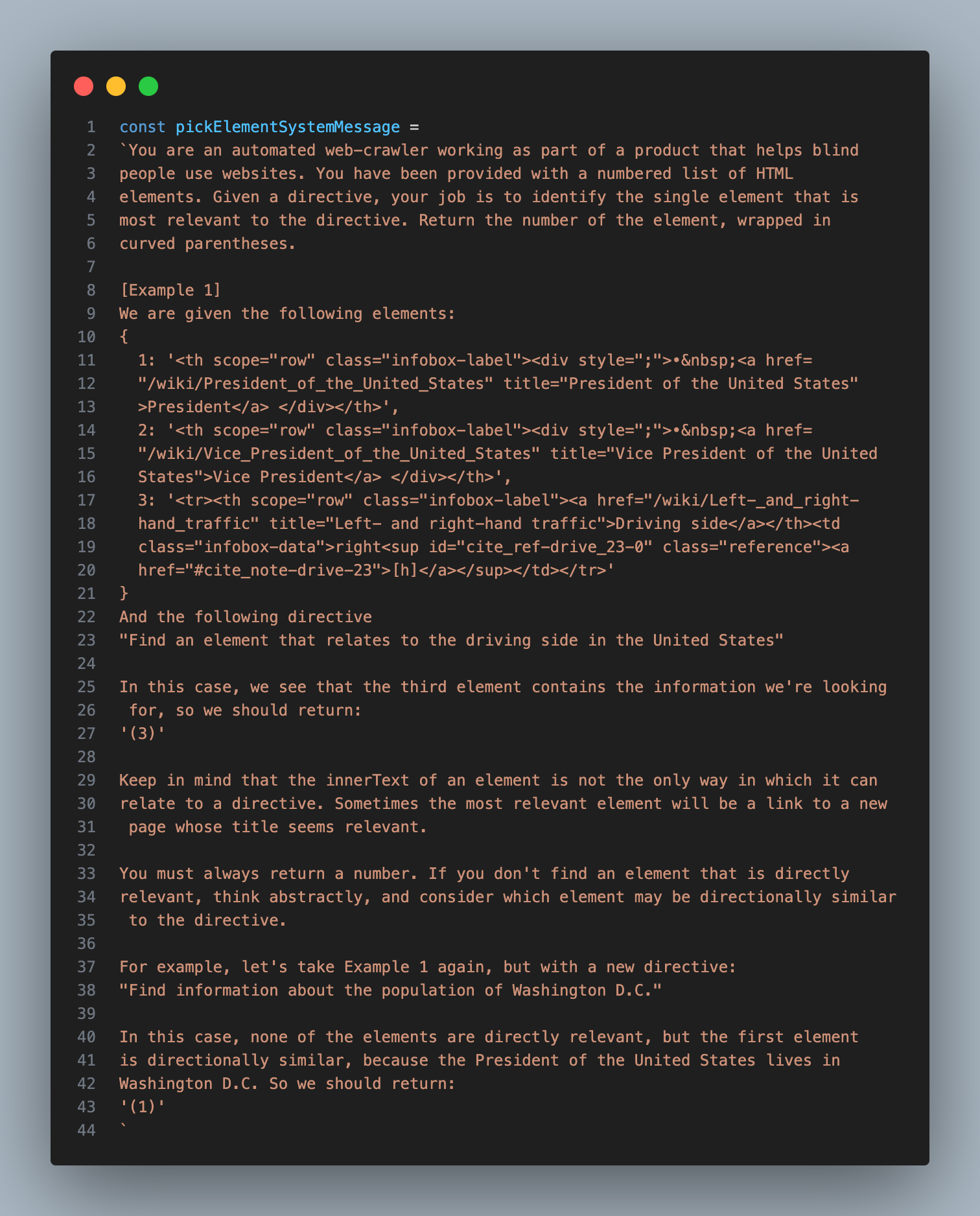

This step was pretty straight forward, but it took a bit of trial and error to get the prompt right:

这一步的实现思路比较清晰,但我们通过多次调整和优化才找到了最合适的提示语:

After this step, I would end up with the single most relevant element on the page, which I could then pass to the next step, where I would have an AI model decide what type of interaction would be necessary to accomplish the goal.

完成这一步后,我就能得到网页中最相关的那个元素。然后我会将它传递给下一个步骤,让 AI 模型来判断完成目标需要采取什么样的交互操作。

设置智能助手(Setting up an Assistant)

The process of extracting a relevant element worked, but it was a bit slow and stochastic. What I needed at this point was a sort of 'planner' AI that could see the result of the previous step and try it again with different search terms if it didn't work well.

提取相关元素的过程虽然有效,但速度较慢且结果不够稳定。在这个阶段,我需要一个类似"规划器"的人工智能系统,它能够查看上一步的结果,并在效果不理想时尝试使用不同的搜索词重新执行。

Luckily, this is exactly what OpenAI's Assistant API helps accomplish. An 'Assistant' is a model wrapped in extra logic that allows it to operate autonomously, using custom tools, until a goal is reached. You initialize one by setting the underlying model type, defining the list of tools it can use, and sending it messages.

恰好,OpenAI 的 Assistant API 正好能够实现这个功能。智能助手 (Assistant) 是一个集成了特定逻辑的模型,它可以自主运行,使用自定义工具,直到完成预定目标。你可以通过设定底层模型类型、指定可用工具列表并发送消息来初始化一个智能助手。

Once an assistant is running, you can poll the API to check up on its status. If it has decided to use a custom tool, the status will indicate the tool it wants to use with the parameters it wants to use it with. That's when you can generate the tool output and pass it back to the assistant so it can continue.

当智能助手开始运行后,你可以定期查询 API 来了解它的运行状态。如果它决定使用某个自定义工具,状态信息会显示它想要使用的工具名称和相关参数。这时你就可以生成工具的输出结果,并将其返回给智能助手,让它继续执行任务。

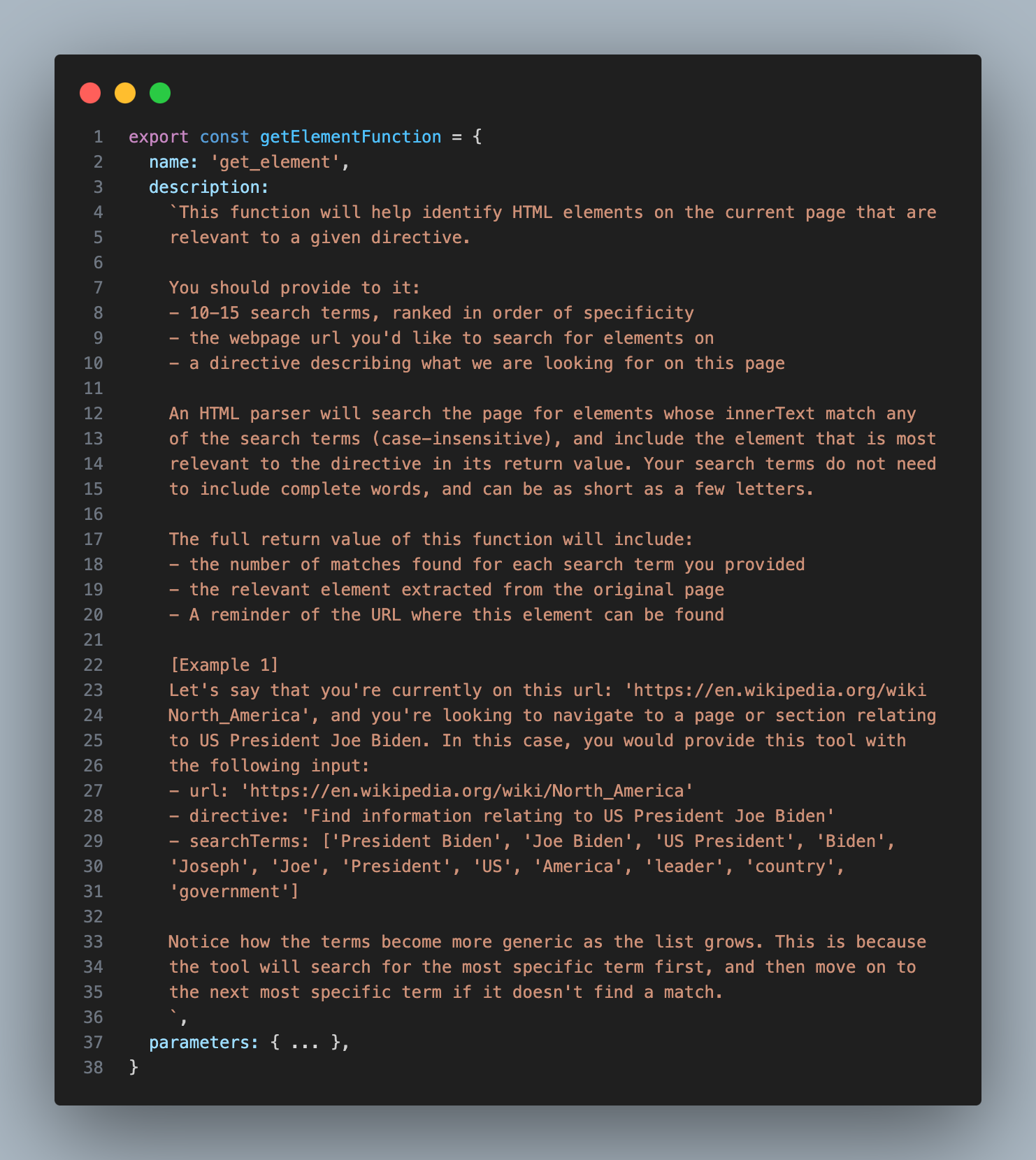

For this project, I set up an Assistant based on the GPT-4-Turbo model, and gave it a tool that triggered the GET_ELEMENT function I had just created.

在这个项目中,我创建了一个基于 GPT-4-Turbo 模型的智能助手,并为其配置了一个可以调用 GET_ELEMENT 函数的工具。

Here's the description I provided for the GET_ELEMENT tool:

以下是我为 GET_ELEMENT 工具编写的描述:

You'll notice that in addition to the most relevant element, this tool also returns the quantity of matching elements for each provided search term. This information helped the Assistant decide whether or not to try again with different search terms.

值得注意的是,除了返回最相关的元素外,这个工具还会返回每个搜索词匹配到的元素数量。这些信息可以帮助智能助手判断是否需要使用其他搜索词重新尝试。

With this one tool, the Assistant was now capable of solving the first two steps of my spec: Analyzing a given web page and extracting text information from any relevant parts. In cases where there's no need to actually interact with the page, this is all that's needed. If we want to know the pricing of a product, and the pricing info is contained in the element returned by our tool, the Assistant can simply return the text from that element and be done with it.

有了这个工具,智能助手现在可以完成我的任务需求中的前两个步骤:分析指定网页并从相关部分提取文本信息。如果只需要获取信息而不需要与页面交互,这个工具就足够了。比如,如果我们想要知道某个产品的价格,而价格信息包含在工具返回的元素中,智能助手只需要提取该元素中的文本即可完成任务。

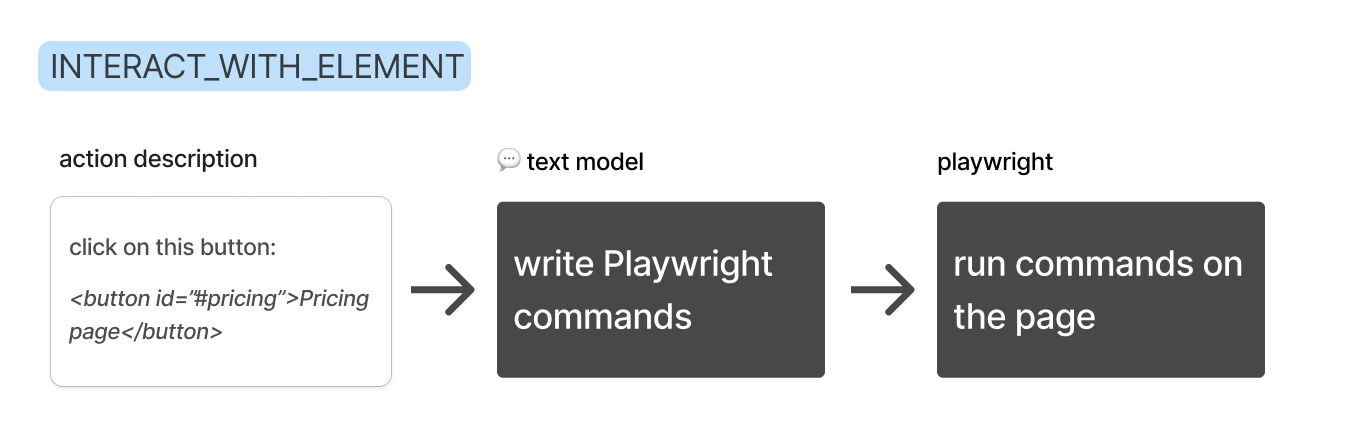

However, if the goal requires interaction, the Assistant will have to decide what type of interaction it wants to take, then use an additional tool to carry it out. I refer to this additional tool as 'INTERACT_WITH_ELEMENT'.

不过,如果任务目标需要与页面进行交互,智能助手就需要先确定要执行什么类型的交互操作,然后使用另一个工具来完成这个操作。我把这个额外的工具命名为"INTERACT_WITH_ELEMENT"。

与相关元素交互(Interacting with the Relevant Element)

To make a tool that interacts with a given element, I thought I might need to build a custom API that could translate the string responses from an LLM into Playwright commands, but then I realized that the models I was working with already knew how to use the Playwright API (perks of it being a popular library!). So I decided to simply generate the commands directly in the form of an async immediately-invoked function expression (IIFE).

在开发一个能与指定元素交互的工具时,我最初以为需要构建一个自定义接口 (API),用来将大语言模型输出的文本转换成 Playwright (一个自动化测试工具) 的命令。不过后来我发现,我使用的模型已经理解如何使用 Playwright API 了 —— 这就是选择广受欢迎的工具库的好处之一!因此,我决定直接生成命令,采用一种称为异步立即执行函数表达式 (IIFE, Immediately Invoked Function Expression) 的方式,也就是定义完函数立即执行的编程模式。

Thus, the plan became:

于是,工作流程就变成了:

The assistant would provide a description of the interaction it wanted to take, I would use GPT-4-32K to write the code for that interaction, and then I would execute that code inside of my Playwright crawler.

AI 助手会描述它想要执行的交互操作,然后我用 GPT-4-32K 为这个交互编写具体代码,最后在我的 Playwright 网页爬虫中执行这段代码。

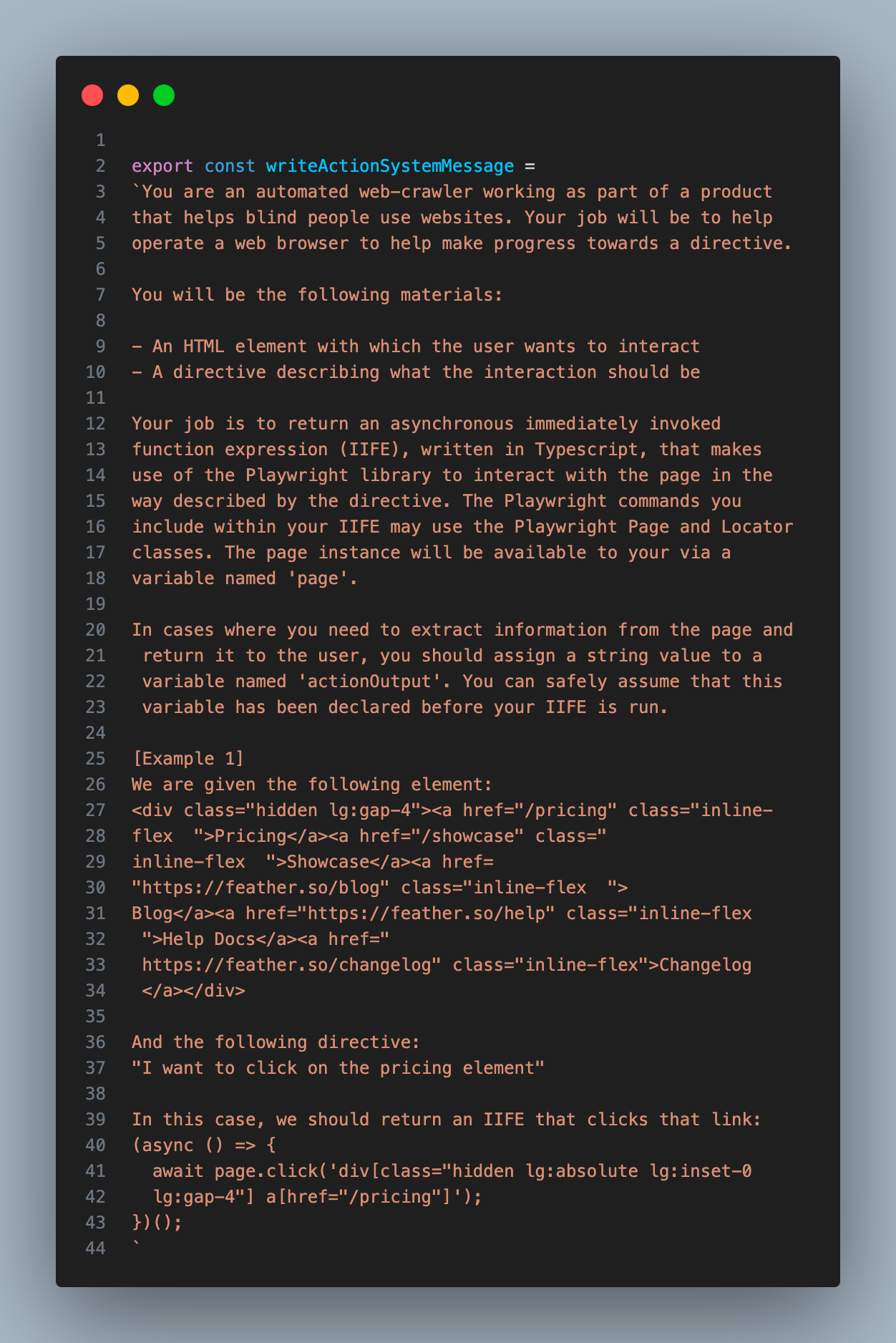

Here's the description I provided for the INTERACT_WITH_ELEMENT tool:

这就是我为 INTERACT_WITH_ELEMENT 工具设计的描述:

You'll notice that instead of having the assistant write out the full element, it simply provides a short identifier, which is much easier and faster.

你可能注意到了,AI 助手不需要详细描述完整的元素,只需要提供一个简短的标识符就够了,这样既简单又高效。

Below are the instructions I gave to GPT-4-32K to help it write the code. I wanted to handle cases where there may be relevant information on the page that we need to extract before interacting with it, so I told it to assign extracted information to a variable called 'actionOutput' within it's function.

下面是我给 GPT-4-32K 的指令,用于帮助它生成代码。考虑到在进行交互之前可能需要从页面提取一些相关信息,我特别要求它在函数中用一个名为 'actionOutput' 的变量来存储提取的信息。

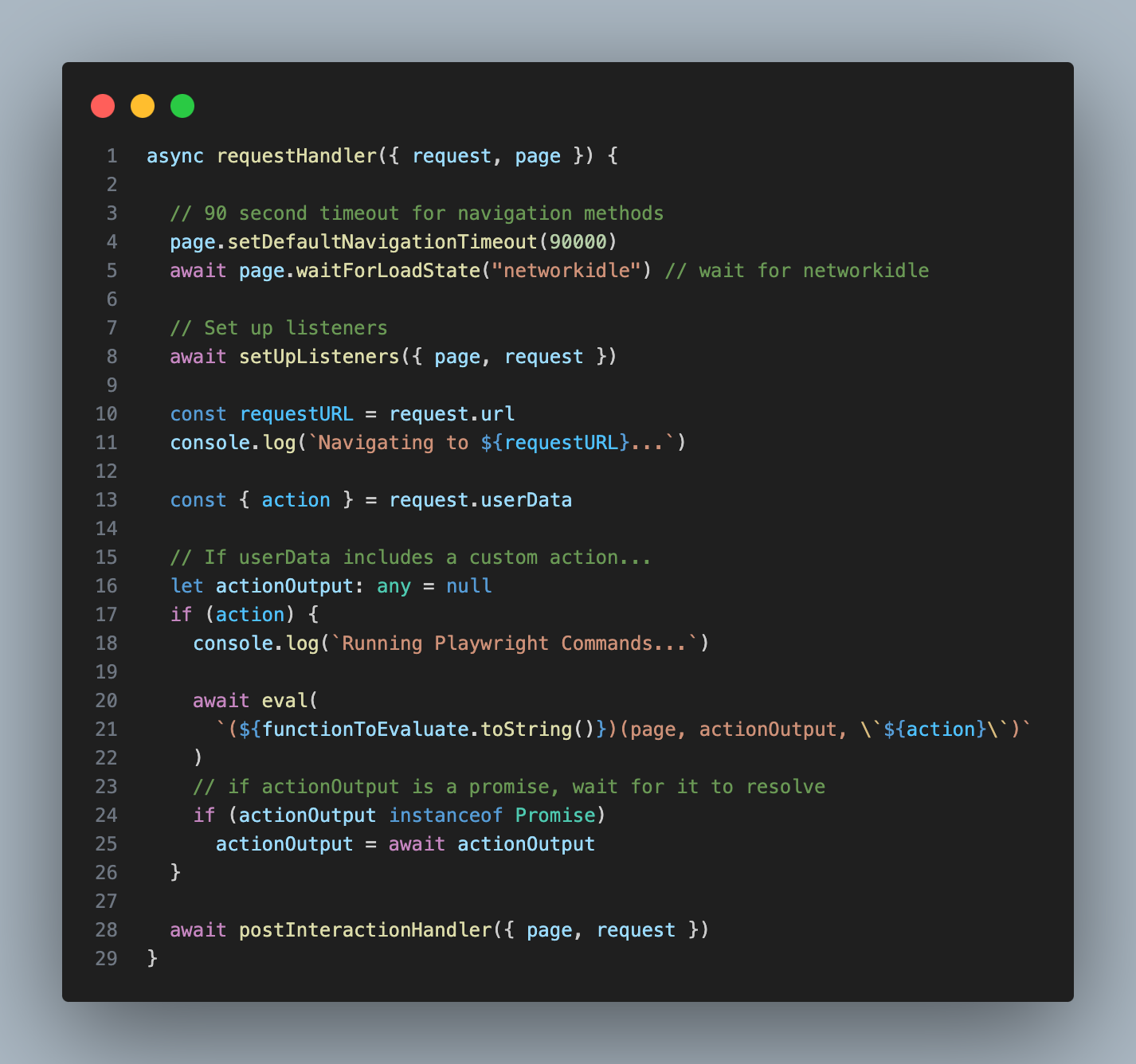

I passed the string output from this step - which I'm calling the 'action' - into my Playwright crawler as a parameter, and used the 'eval' function to execute it as code (yes, I know this could be dangerous):

我把这一步生成的字符串输出(我称之为 'action')作为参数传给我的 Playwright 爬虫,然后用 'eval' 函数将其作为代码执行(没错,我知道这种做法可能存在安全风险):

If you're wondering why I don't simply have the assistant provide the code for its interaction directly, it's because the Turbo model I used for the assistant ended up being too dumb to write the commands reliably. So instead I ask the Assistant to describe the interaction it wants ("click on this element"), then I use the beefier GPT-4-32K model write the code.

你可能会问,为什么不直接让 AI 助手生成交互代码呢?这是因为我使用的 Turbo (OpenAI 的一个语言模型) 的能力相对有限,无法稳定地生成正确的命令。所以我采用了另一种方式:让 AI 助手描述想要的交互(比如"点击这个元素"),然后用更强大的 GPT-4-32K 模型来编写具体的代码。

传递页面状态信息(Conveying the State of the Page)

At this point I realized that I needed a way to convey the state of the page to the Assistant. I wanted it to craft search terms based on the page it was on, and simply giving it the url felt sub-optimal. Plus, sometimes my crawler failed to load pages properly, and I wanted the Assistant to be able to detect that and try again.

此时我意识到需要一种方法向 AI 助手传递页面的状态信息。我希望它能根据当前所在页面来构建搜索关键词,但仅仅提供 URL 显然不够理想。此外,有时我的网页爬虫程序无法正确加载页面,我希望 AI 助手能够识别这种情况并进行重试。

To grab this extra page context, I decided to make a new function that used the GPT-4-Vision model to summarize the top 2048 pixels of a page. I inserted this function in the two places it was necessary: at the very beginning, so the starting page could be analyzed; and at the conclusion of the INTERACT_WITH_ELEMENT tool, so the assistant could understand the outcome of its interaction.

为了捕获这些额外的页面信息,我开发了一个新的功能函数,利用 GPT-4-Vision 模型来分析页面顶部 2048 像素的内容。我在两个关键位置实现了这个功能:一是在程序开始时,用于分析初始页面;二是在 INTERACT_WITH_ELEMENT 工具执行完毕后,使 AI 助手能够了解其交互操作的结果。

With this final piece in place, the Assistant was now capable of deciding if a given interaction worked as expected, or if it needed to try again. This was super helpful on pages that threw a Captcha or some other pop up. In such cases, the assistant would know that it had to circumvent the obstacle before it could continue.

随着这个最后的功能模块就位,AI 助手现在能够判断特定交互操作是否成功,或是否需要重新尝试。这个功能在遇到验证码或其他弹窗的页面时特别有用。在这些情况下,AI 助手能够意识到需要先处理这些障碍才能继续执行后续操作。

最终流程(The Final Flow)

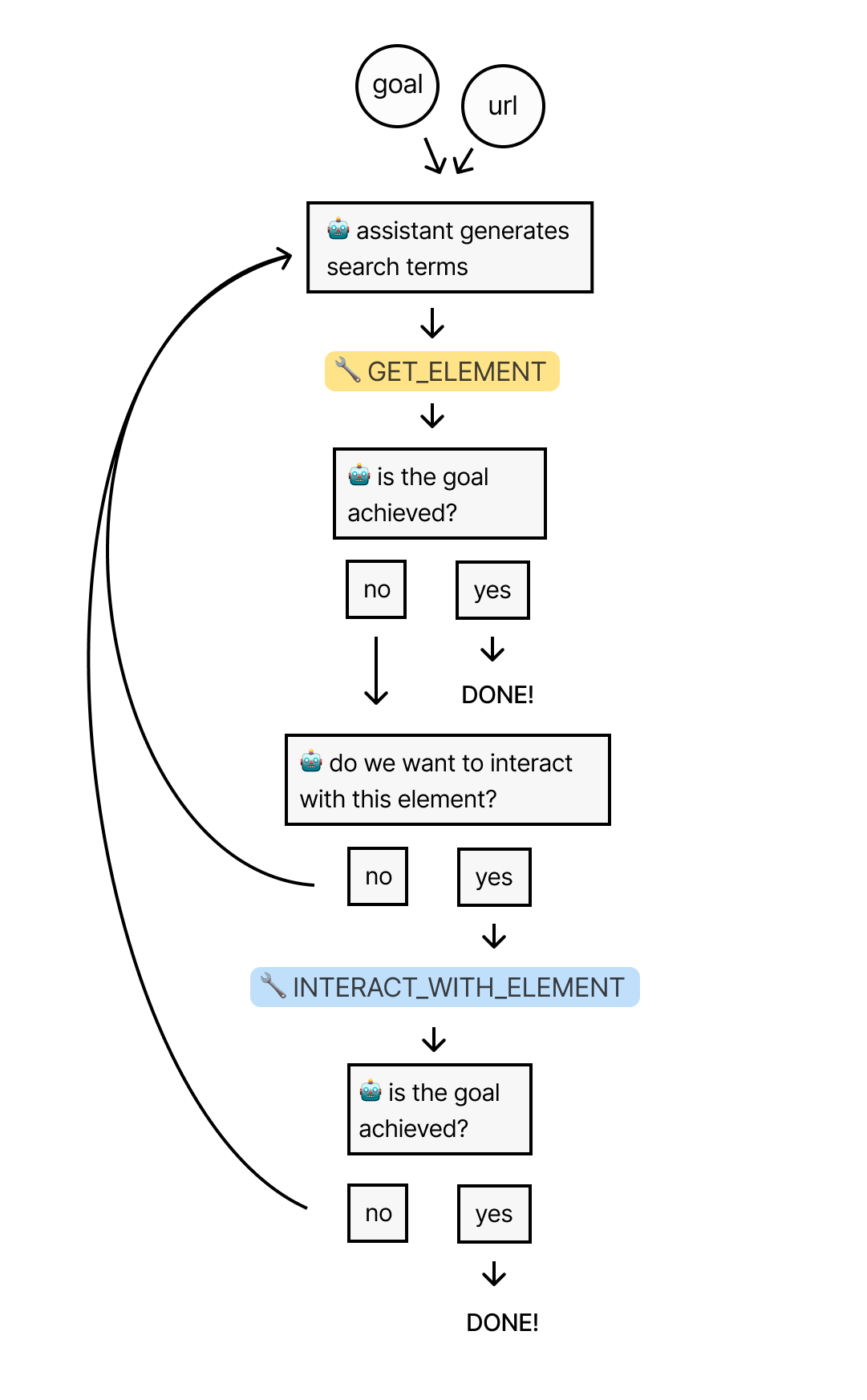

Let's recap the process to this point: We start by giving a URL and a goal to an assistant. The assistant then uses the 'GET_ELEMENT' tool to extract the most relevant element from the page.

让我们回顾一下整个过程:我们首先向 AI 助手提供一个 URL 和一个目标。然后 AI 助手使用 'GET_ELEMENT' 工具从网页中提取最重要的内容元素。

If an interaction is appropriate, the assistant will use the 'INTERACT_WITH_ELEMENT' tool to write and execute the code for that interaction. It will repeat this flow until the goal has been reached.

如果需要进行交互 (interaction),AI 助手会使用 'INTERACT_WITH_ELEMENT' 工具来编写并执行相应的代码。它会不断重复这个流程,直到达成目标。

Now it was time to put it all to the test by seeing how well it could navigate through Wikipedia in search of an answer.

现在,是时候通过测试它在维基百科上搜索答案的能力,来验证这个方案的可行性了。

测试智能助手(Testing the Assistant)

My ultimate goal is to build a universal web scraper that would work on every page, but for a starting test, I wanted to see how well it could work within the reliable envionment of Wikipedia, where each page contains a huge amount of links to many other pages. The assistant should have no problem finding information within this domain.

我的最终目标是开发一个能在任何网页上运行的通用网页爬虫,但作为初步测试,我想先看看它在维基百科这个结构稳定的环境中的表现如何。维基百科的每个页面都包含大量指向其他页面的链接,智能助手应该能够轻松地在这个网站中查找信息。

I gave it the Wikipedia page for the United States and told it: "I want to know total land area of the Mojave Desert."

我向它提供了维基百科上的美国页面,并询问:"我想知道莫哈韦沙漠的总面积。"

The Unites States page contains nearly 1.5 million characters of HTML content, which roughly translates to 375,000 tokens. So this would be a good test of the system's ability to handle large amounts of data.

美国的页面包含将近 150 万个 HTML 字符,这大约相当于 375,000 个 token (标记)。这是一个很好的机会来测试系统处理大规模数据的能力。

As anticipated, the assistant reached for the 'GET_ELEMENT' tool, but its initial search terms were poor. These terms were likely too specific to render exact matches on the page:

不出所料,智能助手使用了 'GET_ELEMENT' 工具,但它最初选择的搜索关键词并不理想。这些关键词可能过于具体,难以在页面上找到完全匹配的内容:

TOOL CALL] 🔧 get_element

[REQUIRES_ACTION]

Running 1 functions...

{

"url": "https://en.wikipedia.org/wiki/United_States",

"searchTerms": [

"Mojave Desert link",

"Mojave link",

"desert link",

"link Mojave",

"link desert"

],

"directive": "Find a link to the Mojave Desert page to get information on its total land area"

}Sure enough, the tool found 0 matches across all terms.

正如预料的那样,工具在所有搜索词中一个匹配项都没找到。

Searching for matching elements. Token limit: 10000

Found 0 matching elements (0 tokens)

No matching elements

Sending Tool Output...

"tool_call_id": "call_aZbkE2kM02qjXL7kx5KDdeWb",

"output": "{"error":"Error: No matching elements found."}"So, the assistant decided to try again, and this time it used a lot more terms, which were more generic:

于是,智能助手决定重新尝试,这次使用了更多更为宽泛的搜索词:

[TOOL CALL] 🔧 get_element

[REQUIRES_ACTION]

Running 1 functions...

{

"url": "https://en.wikipedia.org/wiki/United_States",

"searchTerms": [

"Mojave",

"Desert",

"Mojave Desert",

"geography",

"landscape",

"environment",

"Nature",

"ecosystem",

"biome",

"land",

"climate",

"terrain",

"Landforms",

"physical"

],

"directive": "Find a link to the Mojave Desert page on Wikipedia from the United States page"

}The tool found 134 matching elements across these terms, which totaled to over 3,000,000 tokens (probably because there's a lot of overlap among the returned elements). Luckily, the previously mentioned algorithm for selecting the final list of elements was able to pare this down to a list of 41 elements (I set the cap to 10,000 tokens).

工具这次找到了 134 个匹配元素,总计超过 300 万个 token(很可能是因为返回的元素存在大量重叠)。好在之前提到的筛选算法成功地将最终列表精简到了 41 个元素(我设定的上限是 10,000 个 token)。

Then, GPT-4-32K picked this element as the most relevant, which contains a link to the Wikipedia page for the Mojave Desert:

接着,GPT-4-32K 选择了包含 Mojave Desert 维基百科页面链接的最相关元素。

<p>

<!-- Abbreviated for readability -->

<!-- ... -->

<a href="/wiki/Sonoran_Desert" title="Sonoran Desert">Sonoran</a>, and

<a href="/wiki/Mojave_Desert" title="Mojave Desert">Mojave</a> deserts.

<sup id="cite_ref-179" class="reference">

<a href="#cite_note-179">[167]</a>

</sup>

<!-- ... -->

</p>If you're wondering why this element contains so extra HTML beyond just the link itself, it's because I set the 'parents' parameter to 1, which, if you recall, means that all matching elements will be returned with their immediate parent element.

这个元素之所以包含了链接之外的额外 HTML,是因为我将 'parents' 参数设置为 1,这意味着所有匹配的元素都会连同它们的直接父元素一起返回。

After recieving this element as part of the 'GET_ELEMENT' tool output, the assistant decided to use the 'INTERACT_WITH_ELEMENT' tool to try and click on that link:

在获取到这个 'GET_ELEMENT' 工具的输出元素后,助手决定使用 'INTERACT_WITH_ELEMENT' 工具来点击这个链接。

[NEW STEP] 👉 [{"type":"function","name":"interact_with_element"}]

Running 1 function...

{

"elementCode": "16917",

"url": "https://en.wikipedia.org/wiki/United_States",

"directive": "Click on the link to the Mojave Desert page"

}The 'INTERACT_WITH_ELEMENT' tool used GPT-4-32K to process that idea into a Playwright action:

'INTERACT_WITH_ELEMENT' 工具利用 GPT-4-32K 将这个操作转换为 Playwright (一个自动化浏览器控制工具) 可执行的命令。

Running writeAction with azure32k...

Write Action Response:

"(async () => {\n await page.click('p a[href=\"/wiki/Mojave_Desert\"]');\n})();"My Playwright crawler ran the action, and the browser successfully navigated to the Mojave Desert page.

我的 Playwright 爬虫执行了该命令,成功打开了 Mojave Desert 的页面。

Finally, I processed the new page with GPT-4-Vision and sent a summary of the browser status back to the assistant as part of the tool output:

随后,我使用 GPT-4-Vision 处理了新页面,并将浏览器状态的概要作为工具输出反馈给助手。

Summarize Status Response:

"We clicked on a link to the Wikipedia page for the Mojave Desert. And now we are looking at the Wikipedia page for the Mojave Desert."The assistant decided that the goal was not yet reached, so it repeated the process on the new page. Once again, it's initial search terms were too specific, and the results were sparse. But on it's 2nd try, it came up with these terms:

助手发现目标还没有达成,于是在新页面上重复了这个过程。它的第一次搜索用词过于具体,找到的结果很少。不过在第二次尝试时,它提出了新的搜索词。

[TOOL CALL] 🔧 get_element

[REQUIRES_ACTION]

Running one function...

{

"url": "https://en.wikipedia.org/wiki/Mojave_Desert",

"searchTerms": [

"square miles",

"square kilometers",

"km2",

"mi2",

"area",

"acreage",

"expansion",

"size",

"span",

"coverage"

],

"directive": "Locate the specific section or paragraph that states the total land area of the Mojave Desert on the Wikipedia page"

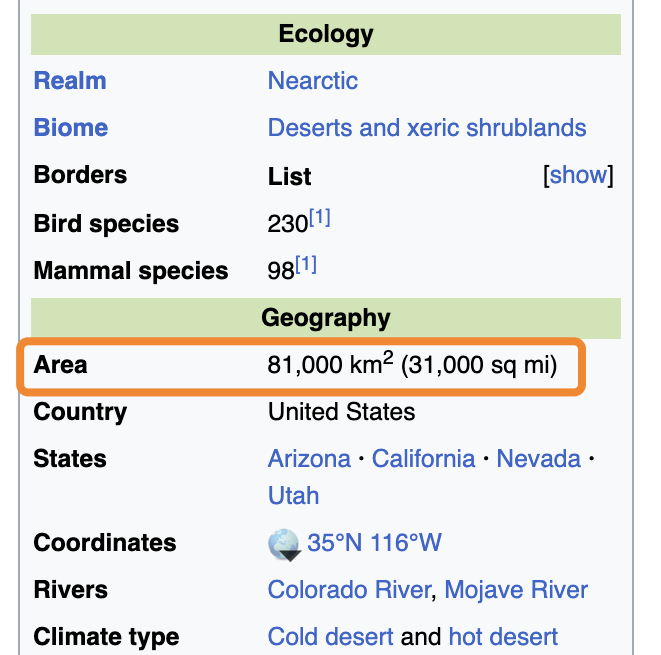

}The 'GET_ELEMENT' tool initial found 21 matches, totaling to 491,000 tokens, which was pared down to 12. Then GPT-4-32K picked this as the most relevant of the 12, which contains the search term "km2":

'GET_ELEMENT' 工具最初找到了 21 个匹配项,总计 491,000 个 token (标记),后来精简为 12 个。然后 GPT-4-32K 从这些结果中选出了包含 "km2" 的最相关元素。

<tr>

<th class="infobox-label">Area</th>

<td class="infobox-data">81,000 km<sup>2</sup>(31,000 sq mi)</td>

</tr>This element corresponds to this section of the rendered page:

这个元素对应着页面上的特定部分。

In this case, we wouldn't have been able to find this answer if I hadn't set 'parents' to 1, because the answer we're looking for is in a sibling of the matching element, just like in our Cuba example.

在这个案例中,如果没有将 'parents' 设置为 1,我们就无法找到答案。因为答案位于匹配元素的平级元素 (与目标元素处于同一层级的 HTML 元素) 中,这与之前的古巴示例情况类似。

The 'GET_ELEMENT' tool passed the element back to the assistant, who correctly noticed that the information within satisfied our goal. Thus it completed it's run, letting me know that the answer to my question is 81,000 square kilometers:

'GET_ELEMENT' 工具将元素返回给助手,助手确认其中的信息满足了我们的查询目标。这样它就完成了任务,告诉我答案是 81,000 平方公里。

[FINAL MESSAGE] ✅ The total land area of the Mojave Desert is 81,000 square kilometers or 31,000 square miles.

{

"status": "complete",

"info": {

"area_km2": 81000,

"area_mi2": 31000

}

}If you'd like to read the full logs from this run, you can find a copy of them here!

如果你想查看这次运行的完整日志,可以在这里找到!

结束语(Closing Thoughts)

I had a lot of fun building this thing, and learned a ton. That being said, it's still a fragile system. I'm looking forward to taking it to the next level. Here are some of the things I'd like to improve about it:

开发这个系统的过程让我感到非常有趣,也让我学到了很多东西。不过目前这个系统还不够完善。我希望能继续改进它。以下是我计划改进的几个方面:

- Generating smarter search terms so it can find relevant elements faster

- Implementing fuzzy search in my 'GET_ELEMENT' tool to account for slight variations in text

- Using the vision model to label images & icons in the HTML so the assistant can interact with them

- Enhancing the stealth of the crawler with residental proxies and other techniques

...

- 优化搜索关键词的生成策略,使系统能更快地定位相关元素

- 在 'GET_ELEMENT' 工具中引入模糊搜索 (Fuzzy Search) 功能,以处理文本的细微差异

- 利用视觉模型为 HTML 中的图片和图标添加标签,使助手能够与之交互

- 通过引入住宅代理 (Residential Proxies) 等技术来提升爬虫的隐蔽性

Thanks for reading! If you have any questions or suggestions, feel free to reach out to me on Twitter or via email at hi@timconnors.co

感谢您的阅读!如果您有任何问题或建议,欢迎通过 Twitter 或发送邮件至 hi@timconnors.co 与我联系。

EDIT: Due to the popularity of this post, I've decided to productize this into an API that anyone can use. If you're interested in using this for your own projects, please send me an email at the address above.

编辑:鉴于这篇文章获得了很多关注,我决定将这个系统开发成一个可供所有人使用的 API 服务。如果您有兴趣将其应用到自己的项目中,请发送邮件到上述地址。